Deathtrap: Overlearning in Predictive Analytics

As I mentioned in my last blog post, I am in the process of gathering survey data for the TDWI Best Practices Report about predictive analytics. Right now, I'm in the data analysis phase. It turns out (not surprisingly) that one of the biggest barriers to adoption of predictive analytics is understanding how the technology works. Education is definitely needed as more advanced forms of analytics move out to less experienced users.

With regard to education, coincidentally I had the pleasure of speaking to Eric Siegel recently about his book, Predictive Analytics: The Power to Predict Who Will Click, Buy, Lie, or Die (www.thepredictionbook.com). Eric Siegel is well known in analytics circles. For those who haven’t read the book, it is a good read. It is business focused with some great examples of how predictive analytics is being used today.

Eric and I focused our discussion on one of the more technical chapters in the book, which addresses the problem known as overfitting (aka overlearning), an important concept in predictive analytics. Overfitting occurs when a model describes the noise or random error in the data rather than the underlying relationship. In other words, it occurs when your model fits the data it was built on a little too well. As Eric put it, "Not understanding overfitting in predictive analytics is like driving a car without learning where the brake pedal is."

While all predictive modeling methods can overlearn, a decision tree is a good technique for intuitively seeing where overlearning can happen. The decision tree is one of the most popular types of predictive analytics techniques used today. This is because it is relatively easy to understand—even by the non-statistician—and ease of use is a top priority among end users and vendors alike.

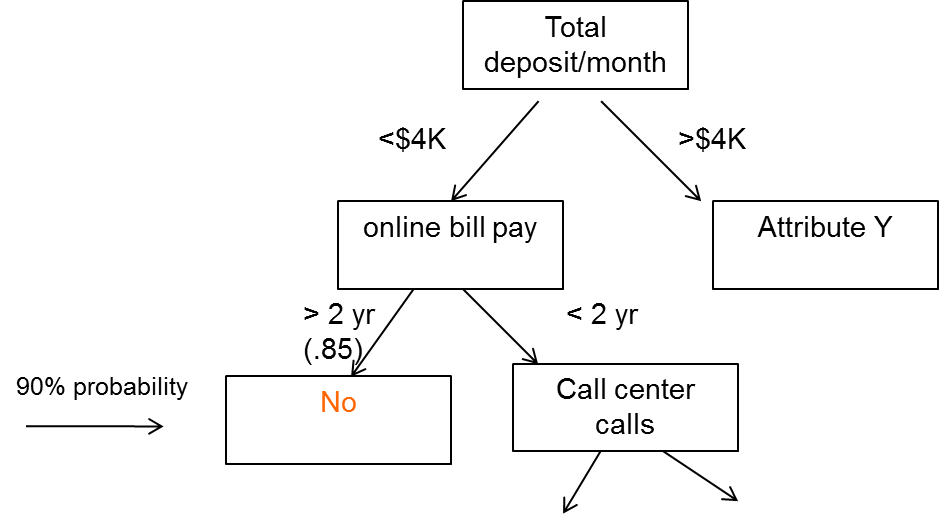

Here's a simplified example of a decision tree. Let's say that you're a financial institution that is trying to understand the characteristics of customers who leave (i.e., defect or cancel). This means that your target variable is whether a customer leaves, with two values: leave (yes) and don't leave (no). After (hopefully) visualizing the data or running some descriptive stats to get a sense of it, and making sure you understand the question being asked, you feed what's called a training set of data into a decision tree program. The training set is a subset of the observations in the overall data set. In this case it might consist of attributes like demographic and personal information about the customer, size of monthly deposits, how long the customer has been with the bank, how long the customer has used online banking, how often they contact the call center, and so on.

Here's what might come out:

The first node of the decision tree is total deposit/month. This decision tree is saying that if a customer deposits more than $4K per month and has used online bill pay for more than two years, they are not likely to leave (there would be probabilities associated with this). However, if they have used online banking for less than two years and contacted the call center X times, there may be a different outcome. This makes sense intuitively. A customer who has been with the bank a long time and is already doing a lot of online bill paying might not want to leave. Conversely, a customer who isn't making a lot of deposits and who has made a lot of calls to the call center might be having trouble with online bill pay. You can see that the tree could branch down and down, each branch with a different probability of an outcome, either yes or no.

Now, here's the point about overfitting. You can imagine that this decision tree could branch out bigger and bigger to a point where it accounts for every case in the training data, including the noisy ones. For instance, a rule with a 97% probability might read, "If customer deposits more than $4K a month and has used online bill pay for more than two years, and lives in ZYX, and is taller than 6 feet, then they will leave." As Eric states in his book, "Overlearning is the pitfall of mistaking noise for information, assuming too much about what has been shown in the data." If you give the decision tree enough variables, some of the rules it finds are going to be spurious.

The way to detect the potential pitfall of overlearning is to apply a set of test data to the model. The test data set is a "hold-out" sample: data that was kept out of the training set. The idea is to see how well the rules perform with this new data. In the example above, there is a high probability that the spurious rule won't pan out in the test set.
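To make the hold-out idea concrete, here is a minimal Python sketch under stated assumptions. The data is synthetic, and nearly everything in it is invented for illustration: the $4K deposit threshold is borrowed from the example above, but the 20% label noise, the height attribute, the sample sizes, and all function names are hypothetical. A nearest-neighbor "memorizer" stands in for a decision tree that has been grown until it accounts for every training case; it is not a real decision tree program.

```python
import random


def make_customers(n, rng):
    """Build n synthetic customers as ((deposit, height), leaves) pairs."""
    rows = []
    for _ in range(n):
        deposit = rng.uniform(0, 8000)   # monthly deposit in dollars
        height = rng.uniform(60, 78)     # height in inches: pure noise
        leaves = deposit <= 4000         # assumed underlying relationship
        if rng.random() < 0.2:           # flip 20% of labels to add noise
            leaves = not leaves
        rows.append(((deposit, height), leaves))
    return rows


def simple_rule(features):
    """One-split 'tree': predict leaving when deposits are $4K or less."""
    deposit, _height = features
    return deposit <= 4000


def make_memorizer(train):
    """Overgrown stand-in: copy the label of the closest training case."""
    def predict(features):
        def squared_distance(row):
            (deposit, height), _label = row
            return (deposit - features[0]) ** 2 + (height - features[1]) ** 2
        return min(train, key=squared_distance)[1]
    return predict


def accuracy(predict, rows):
    """Fraction of rows whose label the model predicts correctly."""
    return sum(predict(f) == label for f, label in rows) / len(rows)


def run_holdout_demo(seed=0, n_train=600, n_test=400):
    """Score both models on the training set and on a hold-out set."""
    rng = random.Random(seed)
    train = make_customers(n_train, rng)
    test = make_customers(n_test, rng)   # hold-out: never used for fitting
    memorizer = make_memorizer(train)
    return {
        "simple_train": accuracy(simple_rule, train),
        "simple_test": accuracy(simple_rule, test),
        "memorizer_train": accuracy(memorizer, train),
        "memorizer_test": accuracy(memorizer, test),
    }


if __name__ == "__main__":
    for name, score in run_holdout_demo().items():
        print(f"{name}: {score:.2f}")
```

In this setup the memorizer should score perfectly on the cases it has already seen and noticeably worse on the hold-out sample, while the simple rule should score about the same on both. That gap between training and test performance is the signature of overlearning.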

In practice, some software packages will do this work for you. They will automatically hold out the test sample when building the model and then show you how the model performs on that test data. However, not all do, so it is important to understand this principle. If you validate your model using hold-out data, then overfitting does not have to be a problem.

I want to mention one other point here about noisy data. With all the discussion in the media about big data, a lot has been said about people being misled by noisy big data. As Eric notes, "If you're checking 500K variables you'll have bad luck eventually; you'll find something spurious." However, chances are that this kind of misleading noise comes from an individual correlation, not a model. There is a big difference. People tend to equate predictive analytics with big data analytics. The two are not synonymous.
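The "bad luck" effect is easy to reproduce. The following Python sketch, again using purely synthetic data and hypothetical function names, generates a random target and a set of random variables that have nothing to do with it, then reports the strongest individual correlation it finds. The sizes (50 observations, up to 2,000 variables) are arbitrary choices to keep it fast, and are far smaller than the 500K in Eric's example.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def strongest_chance_correlation(n_vars, n_obs=50, seed=0):
    """Largest |r| between a random target and n_vars random variables."""
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_obs)]
    best = 0.0
    for _ in range(n_vars):
        # Each candidate is unrelated to the target by construction.
        candidate = [rng.gauss(0, 1) for _ in range(n_obs)]
        best = max(best, abs(pearson(candidate, target)))
    return best


if __name__ == "__main__":
    for n_vars in (1, 100, 2000):
        strongest = strongest_chance_correlation(n_vars)
        print(f"{n_vars} variables checked -> strongest |r| = {strongest:.2f}")
```

Checking more variables can only raise the strongest correlation found, even though none of them carries any real information about the target. Note that this is a statement about individual correlations screened one at a time; a model validated on hold-out data is a different matter.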

Are there issues with any technique? Of course. That's why education is so important. However, there is a great deal to be gained from predictive analytics models, as more and more companies are discovering.

Posted by Fern Halper, Ph.D. on September 11, 2013