Generative AI Enthusiasm Versus Expertise: A Boardroom Disconnect

Members of corporate boards may be overestimating their generative AI expertise and should instead commit to learning the technology to ensure responsible adoption.

- By Asa Whillock

- March 4, 2024

Generative AI is moving the needle everywhere you look across the enterprise, and it ranks among the top five developments expected to have the most impact over the next three years. Many business leaders have swiftly (and correctly) identified major benefits of generative AI, such as increased market competitiveness, improved security, and enhanced product innovation. Board members are seeing this, too. A recent survey of 300 board members conducted by Alteryx (the company I work for) revealed that 46% see generative AI as the “main priority above anything else” for their board right now.

The growing interest in generative AI, and its rising strategic importance, are welcome. Organizations should dig deep into the ins and outs of the technology, assess potential risks, and seek industry guidance to adopt it responsibly. However, one surprising finding from the survey suggests this might not be happening.

Overconfidence in Existing Skills

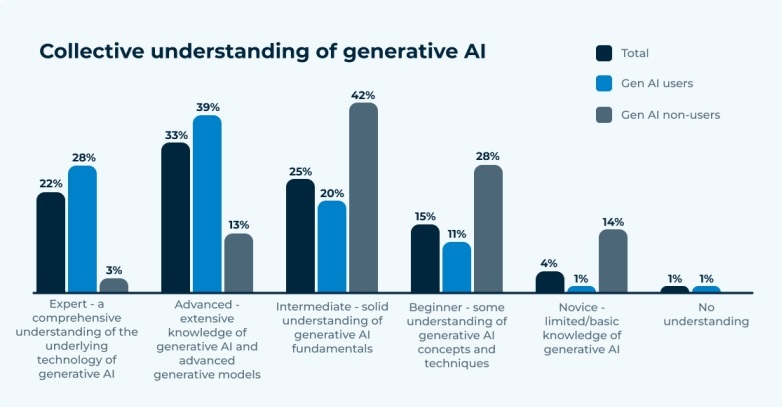

Many board members surveyed appear to be vastly overrating their understanding of generative AI, particularly those members based in the U.S. and working in the retail sector. For example, when board members in companies already using generative AI were asked about their board’s “collective understanding and knowledge” of generative AI:

- 28% said their board possesses an expert level of knowledge, including a comprehensive understanding of the underlying technology of generative AI.

- 39% said their board is advanced, with extensive knowledge of generative AI and advanced generative models.

- 20% said their board possesses intermediate knowledge of generative AI.

- The remaining 13% said their board is either at a beginner or novice level or has no understanding of generative AI at this time.

To be fair, overconfidence could stem from the fact that using generative AI is a largely intuitive and user-friendly process. However, the incredibly confident 67% (28% expert plus 39% advanced) who believe their board is “advanced” or “expert” regarding generative AI may be disconnected from reality. Being an expert in a technology has a particular meaning: it often requires a decade or more of study of a complex system and multiple degrees or certifications. The reward for all that time is unparalleled scientific insight into how that system functions. There’s no substitute for spending that time exploring something so groundbreaking.

Given how little time there has been to truly understand the topic, board members who describe themselves as experts are more likely “sophomores.” Etymologically, sophomore means “wise fool”: someone who has spent enough time on a topic to feel they know more than a complete newcomer, but who is hardly an expert.

This claimed expertise looks even more likely to be shallow understanding when one considers that even data science and data leaders are signaling their own lack of expertise. It suggests that board members have yet to break past the sophomore jinx to a deeper plane of understanding.

Consider this: In a Forbes article (“Are Boards Kidding Themselves About AI?”), author and professor Tom Davenport wrote, “I doubt that 29% of even formally-trained computer scientists fully understand the underlying transformer models that make generative AI work. I have been studying them for a couple of years and wouldn’t put myself in the expert category.”

The Stakes of Getting Generative AI “Right” Right Now

Figure 1. What is your assessment of the collective understanding and knowledge the board of directors in your organization has of generative AI? (Source: "New Research: What Boardroom Leaders Think About Generative AI")

What makes these results so important is that each company surveyed will soon make critical decisions related to generative AI. The benefits of getting it right in these relatively early stages will compound exponentially into the future, and so will the costs of getting it wrong.

Yet a board, or anyone else, that overrates its grasp of generative AI is far more likely to make big calls without consulting internal or external experts. Looking strictly at surveyed companies that are using generative AI now, an eye-popping 70% of board members believe the board has the knowledge to make informed strategic decisions without outside help. The numbers change radically for companies that aren’t yet using generative AI: 83% of those board members say their knowledge is not at a level where they could make unaided strategic decisions.

Just as AI solutions should reflect the experiences of a diverse spectrum of individuals to ensure fairness and inclusivity, leaders should seek input from a variety of sources -- internal and external -- before making technology decisions regarding generative AI.

Educating “Experts” About Risk

Educating business leaders and stakeholders -- including those who self-identify as experts -- will be key for companies in the coming months and years. Analytics and AI experts will need to find better ways to inform key decision-makers about generative AI. That means going beyond the surface to convey an understanding of the underlying technologies, too.

Adopting Generative AI Responsibly

Companies that are serious about adopting generative AI across their entire organization must ensure they have the mechanisms to manage risk and adopt the technology responsibly. It isn’t enough to create and implement a governance plan; companies must then expend the energy to enforce its guidelines. Otherwise, these and other IT policies become pointless, opening the door to even greater vulnerabilities and exposure.

How can organizations adopt generative AI with confidence? Hint: It takes more than just feeling good about their decisions based on their perceived expertise. Here are six principles and questions to consider. Each may require more than one dedicated expert to ensure AI is being adopted responsibly:

- Can you and your customers understand the outcomes produced by your AI usage? Transparency and explainability are key to assessing the integrity and accuracy of AI outcomes.

- Do you have robust mechanisms in place to enable human oversight? Balancing AI's capabilities with human judgment remains crucial.

- Are you taking proactive measures to prioritize privacy and security? Security, privacy, and trust are intertwined and necessary to maintain the integrity of AI solutions and outcomes.

- How do you make sure your AI is helping users achieve thorough, accurate, and intended outcomes? Reliability and safety are essential to creating responsible outcomes.

- How do you identify, address, and mitigate AI bias? You should continually work to improve your ability to identify and address bias in your models and training data throughout the development life cycle.

- Are you considering the potential societal implications of using AI for a given purpose? AI should enable progress and empower socially responsible change.

With these principles and buy-in from a wide swath of your employee base, your organization can effectively democratize generative AI. With a board that recognizes its generative AI knowledge gaps and does the work to earn the label “expert,” companies can set themselves on a course for success.

In the meantime, leaders can capitalize on this board enthusiasm to spread awareness of generative AI’s importance and influence funding sources within the company. One key message to convey is the importance of democratizing the technology across the organization so that as many people as possible can unlock its value.